Shih-Chieh Dai (戴士捷)

Salt Lake City, UT

I am a first-year PhD student at the Kahlert School of Computing, University of Utah, where I work on Machine Learning Security, advised by Prof. Guanhong Tao. I completed my M.S. in Information Science at the University of Texas at Austin. My research interests are primarily in Code LLM Security and NLP Applications.

I started my NLP journey as a research assistant in the Natural Language Processing and Sentiment Analysis (NLPSA) Lab at Academia Sinica, supervised by Dr. Lun-Wei Ku.

My research at NLPSA focused on fake news intervention, including reinforcement learning for verified news recommendation and the generation of fact-checking explanations.

News

A Comprehensive Study of LLM Secure Code Generation

Shih-Chieh Dai, Jun Xu, and Guanhong Tao

Preprint, Under Review

[Paper] [Code]

LLM-in-the-loop: Leveraging Large Language Model for Thematic Analysis

Shih-Chieh Dai, Aiping Xiong, and Lun-Wei Ku

In: Findings of the Conference on Empirical Methods in Natural Language Processing (EMNLP 2023 Findings), Short Paper

[Paper]

Is Explanation the Cure? Misinformation Mitigation in the Short-term and Long-term

Yi-Li Hsu, Shih-Chieh Dai, Aiping Xiong, and Lun-Wei Ku

In: Findings of the Conference on Empirical Methods in Natural Language Processing (EMNLP 2023 Findings), Short Paper

[Paper]

Ask to Know More: Generating Counterfactual Explanations for Fake Claims

Shih-Chieh Dai*, Yi-Li Hsu*, Aiping Xiong, and Lun-Wei Ku (*Equal contribution)

In: ACM SIGKDD Conference on Knowledge Discovery and Data Mining (KDD 2022), Oral Presentation

[Paper]

VICTOR: An Implicit Approach to Mitigate Misinformation via Continuous Verification Reading

Kuan-Chieh Lo, Shih-Chieh Dai, Aiping Xiong, and Lun-Wei Ku

In: The ACM Web Conference (WWW 2022), Oral Presentation

[Paper]

Escape from An Echo Chamber

Shih-Chieh Dai*, Kuan-Chieh Lo*, Aiping Xiong, Jing Jiang, and Lun-Wei Ku (*Equal contribution)

In: The ACM Web Conference (WWW 2021), Demonstration

[Paper] [video]

All the Wiser: Fake News Intervention Using User Reading Preferences

Shih-Chieh Dai*, Kuan-Chieh Lo*, Aiping Xiong, Jing Jiang, and Lun-Wei Ku (*Equal contribution)

In: ACM International Conference on Web Search and Data Mining (WSDM 2021), Demonstration

[Paper] [video]

Exam Keeper: Detecting Questions with Easy-to-Find Answers

Shih-Chieh Dai, Ting-Lun Hsu*, and Lun-Wei Ku (*Equal contribution)

In: The ACM Web Conference (WWW 2019), Demonstration

[Paper] [video]

Upstream, Downstream or Competitor? Detecting Company Relations for Commercial Activities

Yi-Pei Chen, Ting-Lun Hsu, Wen-Kai Chung, Shih-Chieh Dai, and Lun-Wei Ku

In: International Conference on Human-Computer Interaction (HCII 2019), Oral Presentation

[Paper]

Penn State University & NLPSA, Academia Sinica

Advisors: Dr. Aiping Xiong and Dr. Lun-Wei Ku

Artificial Intelligence and Human-Centered Computing Lab, UT-Austin

Advisor: Dr. Matt Lease

Natural Language Processing and Sentiment Analysis Lab, Academia Sinica

Advisor: Dr. Lun-Wei Ku

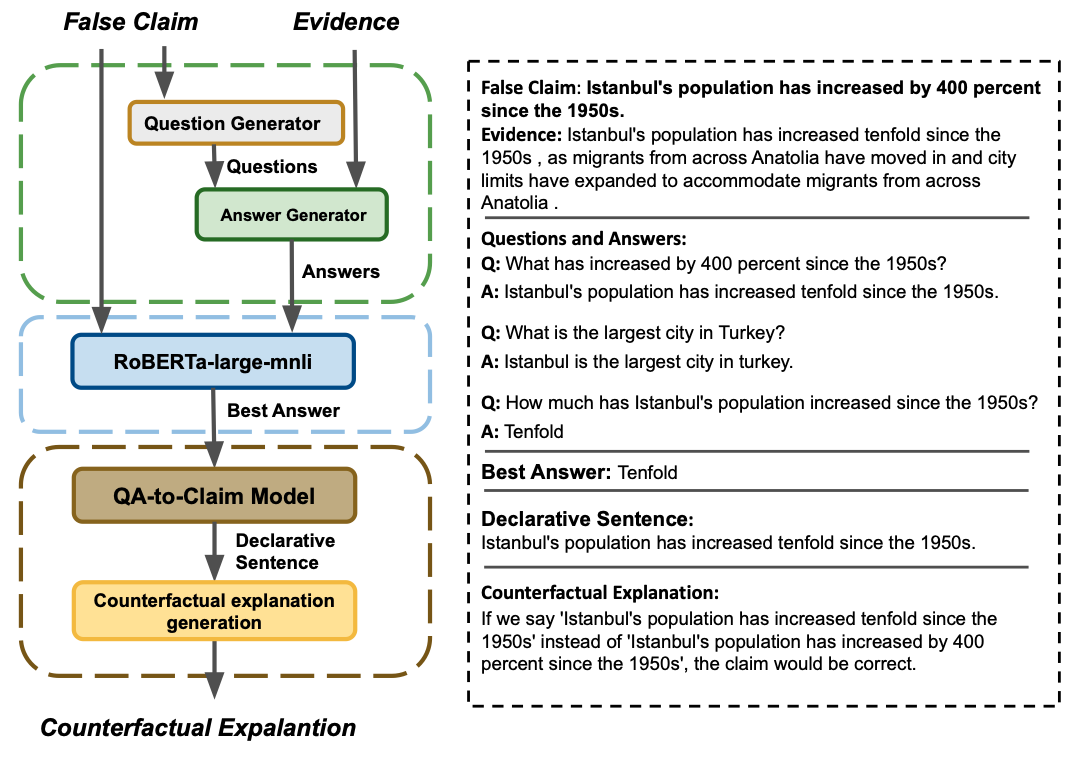

Generating Counterfactual Explanations for Fake Claims

Automated fact-checking systems have been proposed to provide veracity predictions quickly and at scale, mitigating the negative influence of fake news on people and on public opinion. However, most studies focus on the veracity classifiers of these systems, which merely predict the truthfulness of news articles. We posit that effective fact checking also relies on people’s understanding of the predictions. We propose elucidating fact-checking predictions with counterfactual explanations that help people understand why a specific piece of news was identified as fake. In this work, generating counterfactual explanations for fake news involves three steps: asking good questions, finding contradictions, and reasoning appropriately. We frame this research question as contradicted entailment reasoning through question answering (QA).

[Paper] [Poster] [Code]

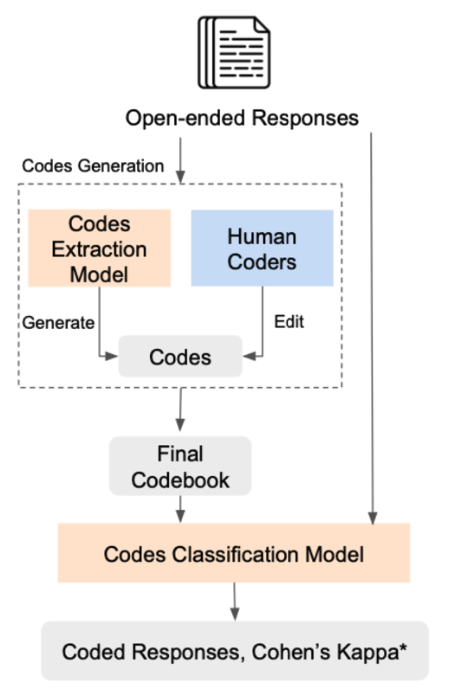

Automating Response Evaluation: A Tool for Automatic Coding Responses to Open-ended Questions

Open-ended questions are widely used in human-subject surveys because they can reveal subjects’ spontaneous responses. However, analyzing these responses is labor-intensive and time-consuming. To address this challenge, I aim to leverage NLP methods to create a tool that assists in analyzing responses to open-ended questions. (UT Master's Capstone Project)

[Poster] [Code]

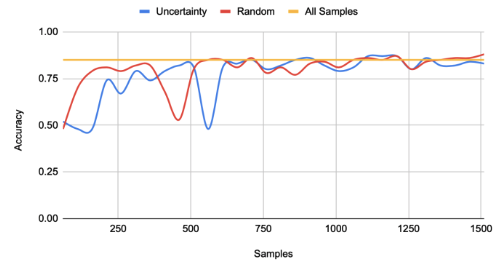

Detecting Political Ideological Leaning

This project aims to design a human-in-the-loop classifier using active learning and rationales. The idea is to build a framework that predicts political ideological leaning from rationales that are either provided by humans or extracted by the machine learning model. My contributions to this project were running the Bert2Bert model from the ERASER benchmark, experimenting with active learning using an uncertainty-based query strategy and random sampling, and implementing the random-sampling baseline using Hugging Face. (UT Master's Independent Study)

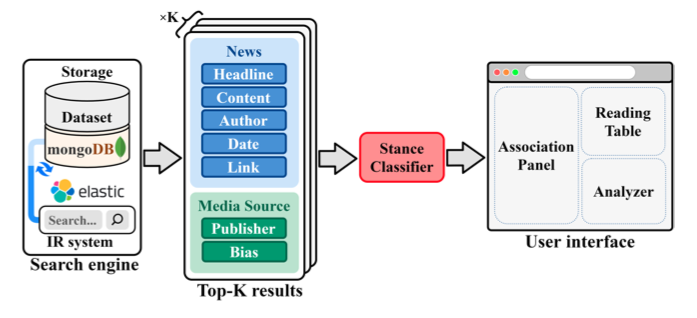

Escape from An Echo Chamber

The echo chamber effect refers to the phenomenon in which online users exhibit selective exposure and ideological segregation on political issues. Prior studies indicate a connection between the spread of misinformation and online echo chambers. In this paper, to help users escape from an echo chamber, we propose a novel news-analysis platform that provides a panoramic view of the stances that different news media sources take toward a particular event. Moreover, to help users better recognize the stances of the sources that published these articles, we adopt a news stance classification model that categorizes each article’s stance toward a relevant claim about politically contested events as “agree”, “disagree”, “discuss”, or “unrelated”.

[Paper] [video]

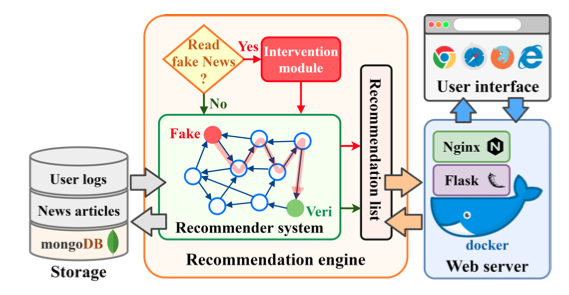

All the Wiser: Fake News Intervention Using User Reading Preferences

To address the increasingly significant issue of fake news, we develop a news reading platform in which we propose an implicit approach to reducing people’s belief in fake news. Specifically, we use reinforcement learning to train an intervention module on top of a recommender system (RS): once a user reads a fake news article, the module is activated and replaces the RS to recommend news that verifies it. To examine the effect of the proposed method, we conduct a comprehensive evaluation with 89 human subjects and measure how effectively the platform changes their beliefs without imposing other limitations on them. Moreover, 84% of participants indicated that the proposed platform can help them defeat fake news.

[Paper] [video]

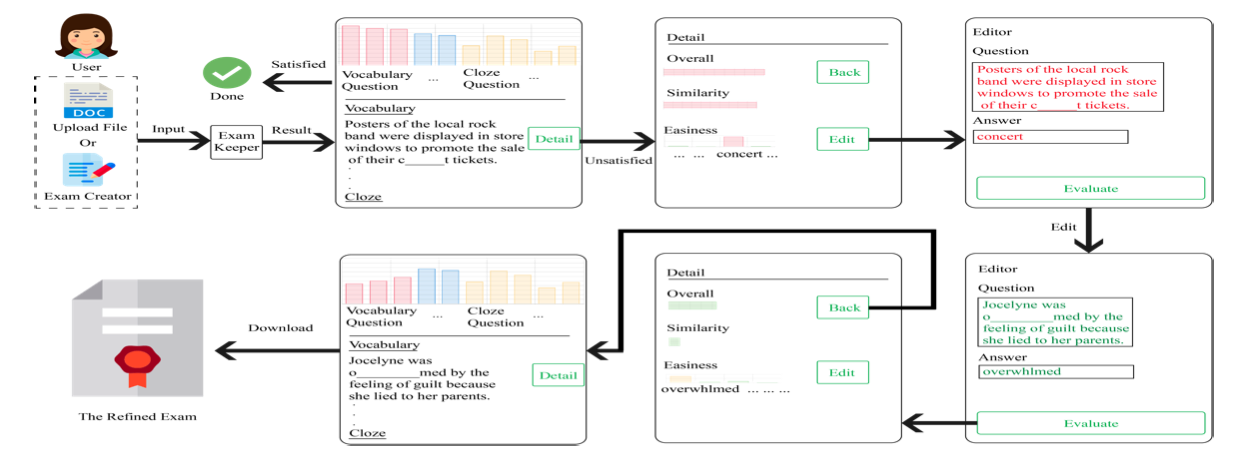

Exam Keeper: Detecting Questions with Easy-to-Find Answers

We present Exam Keeper, a tool that measures the availability of answers to exam questions for ESL students. Exam Keeper targets two major sources of answers: the web and apps. ESL teachers can use it to estimate which questions are easily answered using information on the web or automatic question answering systems, helping them avoid such questions in exams or homework and thereby prevent students from misusing technology.

[Paper] [video]

Graduation Project Competition Second Place

Project: MindReader - A Micro-moment Recommendation Mechanism API

Advisor: Prof. Yi-Ling Lin

NCCU, Taiwan, 2019

Creative Software Applications Contest Excellent Work Honorable Mention

Project: CHACHA: An App That Can Recommend a Drink for You

Advisor: Prof. Fang Yu

Ministry of Education, Taiwan, 2018